Injecting Quantum Noise into AI: My New Work at Kraftwerk Berlin

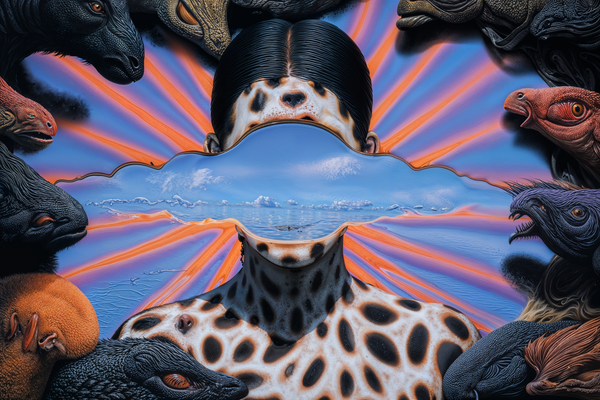

Exploring the unpredictable beauty of quantum randomness in an upcoming exhibition at Kraftwerk Berlin

Dear friends,

The past few weeks have been intense but exciting.

I’ve been deep in an experimental project that merges art, AI, and quantum computing, and now it’s time to share the first glimpses.

This project will be exhibited at Kraftwerk Berlin next week as part of a multi-sensory installation by Laure Prouvost, in collaboration with LAS Art Foundation.

On top of that, I’m also excited about a new collaboration with Artcamp, which we’ll be publishing soon. But let’s focus on Kraftwerk for now.

The Exhibition: A Multi-Sensory Experience

The show is a deep, immersive experience, incorporating video, sculpture, sound, and even scent. It opens on February 21st and runs until May 4th at Kraftwerk Berlin.

While I can’t show my work just yet, I can at least talk about it and give you some insight into the process behind it. You can find more details about the exhibition here.

Transforming Video & Sound with Quantum Noise

For this project, I transformed Laure’s videos and sounds using Quantum Noise.

But what does that actually mean?

Quantum noise is data captured directly from the unpredictable fluctuations of a quantum computer: randomness that originates in quantum mechanics itself.

Think of it like the schhhh-sound you hear on a radio signal, but at a subatomic level. Scientists usually try to eliminate this noise to make quantum computers more precise. But instead of fighting it, we injected it directly into Stable Diffusion.

How I Injected Quantum Noise into AI

We received a large dataset of quantum noise from Google Quantum Labs, and I used it to manipulate both image and audio generation in a way that has never been done before:

1. Quantum Noise in Image Generation

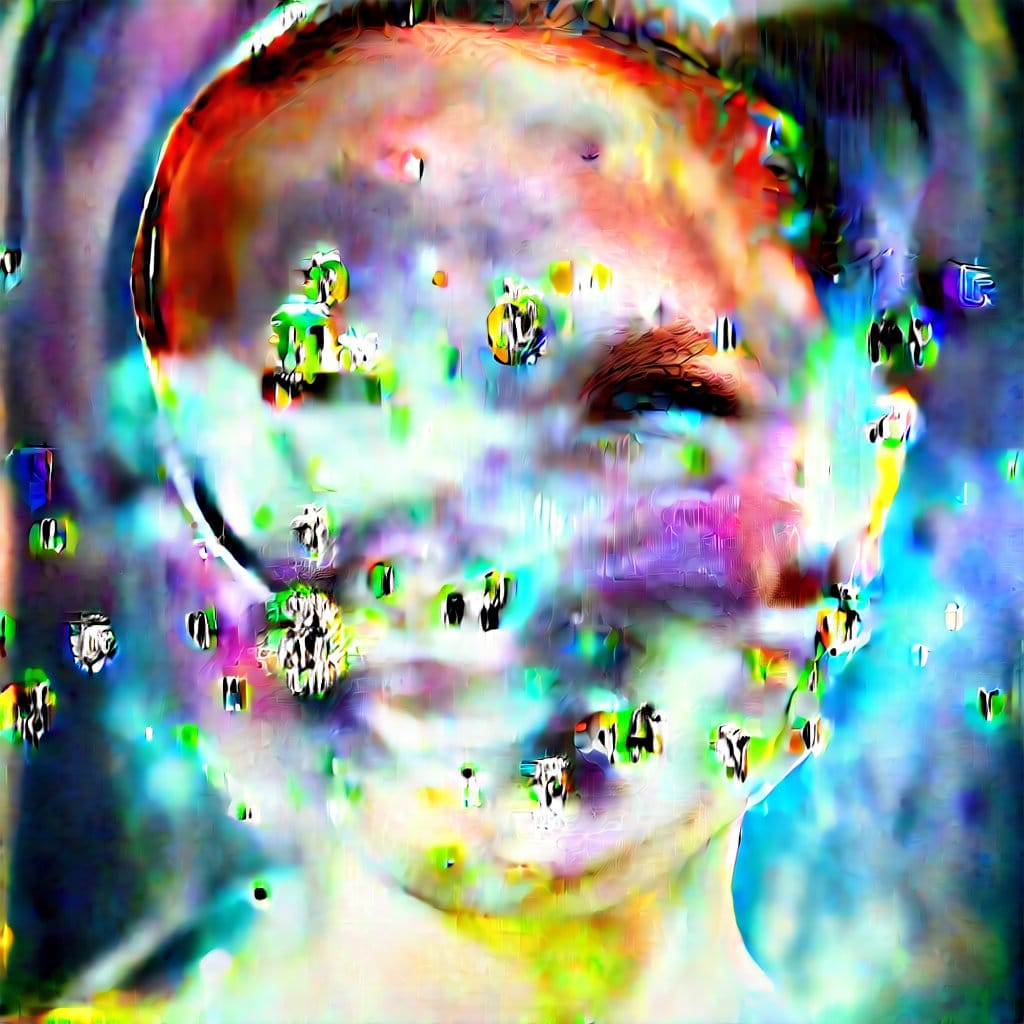

Stable Diffusion, the AI model I used, generates an image by starting from random noise and removing it step by step. Normally that noise comes from a pseudo-random number generator. I dug into the image generation process and replaced this standard noise with quantum noise, which led to unpredictable, glitchy, and chaotic results.
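To make the idea a bit more concrete: Stable Diffusion starts from a latent tensor of standard Gaussian noise (for SD 1.x at 512×512 pixels, that is 4 channels of 64×64). Below is a minimal sketch of one way to build such a tensor from an external noise source instead of a pseudo-random generator. It assumes the quantum data arrives as a string of measured bits ('0'/'1'), packs them into uniform numbers, and converts those to Gaussian values with the Box-Muller transform. The bit format, the function names, and the Box-Muller step are assumptions for illustration, not the pipeline behind the installation, and it uses plain Python lists so it runs without dependencies.

```python
import math
import random

# Assumption: SD 1.x at 512x512 uses a 4 x 64 x 64 latent (the VAE downsamples by 8).
LATENT_SHAPE = (4, 64, 64)


def bits_to_uniforms(bits: str, bits_per_sample: int = 32) -> list[float]:
    """Pack a string of '0'/'1' characters into floats in the open interval (0, 1)."""
    scale = 2 ** bits_per_sample
    out = []
    for i in range(0, len(bits) - bits_per_sample + 1, bits_per_sample):
        k = int(bits[i:i + bits_per_sample], 2)
        # The +0.5 offset keeps values strictly inside (0, 1), so log() below never sees 0.
        out.append((k + 0.5) / scale)
    return out


def uniforms_to_gaussians(u: list[float]) -> list[float]:
    """Box-Muller transform: each pair of uniforms yields two standard-normal samples."""
    z = []
    for i in range(0, len(u) - 1, 2):
        r = math.sqrt(-2.0 * math.log(u[i]))
        theta = 2.0 * math.pi * u[i + 1]
        z.append(r * math.cos(theta))
        z.append(r * math.sin(theta))
    return z


def quantum_latent(bits: str, shape=LATENT_SHAPE) -> list[list[list[float]]]:
    """Hypothetical helper: turn measured bits into a (channels, height, width) Gaussian latent."""
    c, h, w = shape
    z = uniforms_to_gaussians(bits_to_uniforms(bits))
    needed = c * h * w
    if len(z) < needed:
        raise ValueError(f"need {needed} Gaussian samples, got {len(z)}")
    # Row-major fill: channel, then row, then column.
    return [[[z[(ci * h + hi) * w + wi] for wi in range(w)] for hi in range(h)] for ci in range(c)]


if __name__ == "__main__":
    # Stand-in for real quantum data: pseudo-random bits, for demonstration only.
    rng = random.Random(0)
    demo_bits = "".join(format(rng.getrandbits(32), "032b") for _ in range(4 * 64 * 64))
    latent = quantum_latent(demo_bits)
    print(len(latent), len(latent[0]), len(latent[0][0]))  # 4 64 64
```

With PyTorch and the diffusers library, such a nested list could be turned into a tensor with `torch.tensor(latent).unsqueeze(0)`, moved to the pipeline's device and dtype, and passed to `StableDiffusionPipeline` through its `latents` argument; the pipeline then scales it by the scheduler's `init_noise_sigma`. Check this against the diffusers version you use.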

2. Quantum Noise in Audio

Similarly, for sound, I used Stable Audio Diffusion and injected quantum noise into the process. The results were far from perfect, but that was the point: embracing randomness and allowing quantum physics to shape the digital world.
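Noise does not only enter at the very start. Stochastic samplers such as DDPM also draw fresh noise at every denoising step, which is a second place an external source can be plugged in. Below is a minimal sketch of the DDPM reverse step, x_{t-1} = (x_t - β_t / √(1 - ᾱ_t) · ε) / √α_t + σ_t · z with σ_t = √β_t, where the per-step noise z comes from a pluggable callable. It works on flat Python lists to stay self-contained; the names `predict_noise` and `next_noise` are hypothetical stand-ins for the model and the noise source, and whether a particular Stable Audio setup uses a stochastic sampler depends on how it is configured.

```python
import math


def ddpm_step(x_t, eps_pred, z, beta_t, alpha_bar_t):
    """One DDPM ancestral step with sigma_t^2 = beta_t.

    x_t:      current noisy sample (flat list, e.g. an audio latent)
    eps_pred: the model's noise prediction for x_t
    z:        fresh standard-normal noise for this step (the injection point)
    """
    if not (len(x_t) == len(eps_pred) == len(z)):
        raise ValueError("x_t, eps_pred and z must have the same length")
    alpha_t = 1.0 - beta_t
    coef = beta_t / math.sqrt(1.0 - alpha_bar_t)
    sigma_t = math.sqrt(beta_t)
    return [(x - coef * e) / math.sqrt(alpha_t) + sigma_t * n for x, e, n in zip(x_t, eps_pred, z)]


def sample(x_T, predict_noise, next_noise, betas):
    """Run the reverse process from t = T-1 down to 0.

    predict_noise(x, t) -> list: hypothetical stand-in for the diffusion model.
    next_noise(n) -> list: returns n standard-normal samples from any source,
    e.g. a quantum-noise stream instead of a pseudo-random generator.
    """
    # Cumulative products alpha_bar_t = prod_{s <= t} (1 - beta_s).
    alpha_bars = []
    prod = 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)

    x = list(x_T)
    for t in reversed(range(len(betas))):
        eps = predict_noise(x, t)
        # DDPM adds no fresh noise on the final step (t == 0).
        z = next_noise(len(x)) if t > 0 else [0.0] * len(x)
        x = ddpm_step(x, eps, z, betas[t], alpha_bars[t])
    return x
```

By contrast, a deterministic sampler such as DDIM with eta = 0 draws no per-step noise, so there only the initial noise would carry the quantum signal.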

If you want to know more about noise in general, have a look at my article here.

This felt like stepping into new territory, blurring the lines between AI, art, and quantum mechanics.

I plan to release a tutorial soon that explains how you can inject your own noise into Stable Diffusion and dives deeper into how noise tensors, latent space, and denoising work. Super nerdy stuff, though.

Come See It at Kraftwerk Berlin

If you’re in Berlin, come and see the show. The exhibition runs from February 21st to May 4th at Kraftwerk Berlin.

The glitchy videos and sound distortions? That’s my work, shaped by quantum noise.

More to come soon!

Thank you for reading.

Much love,

Marius