Innovating AI Art with Stable Diffusion and Deforum: The Power of Custom APIs

From Idea to Implementation: My Experience Developing an AI Animation Tool

Dear friends,

I spent the last few nights developing a custom tool to control Stable Diffusion.

Stable Diffusion is an open-source tool for generating AI images. With plugins like Deforum, it is possible to turn those images into animations.

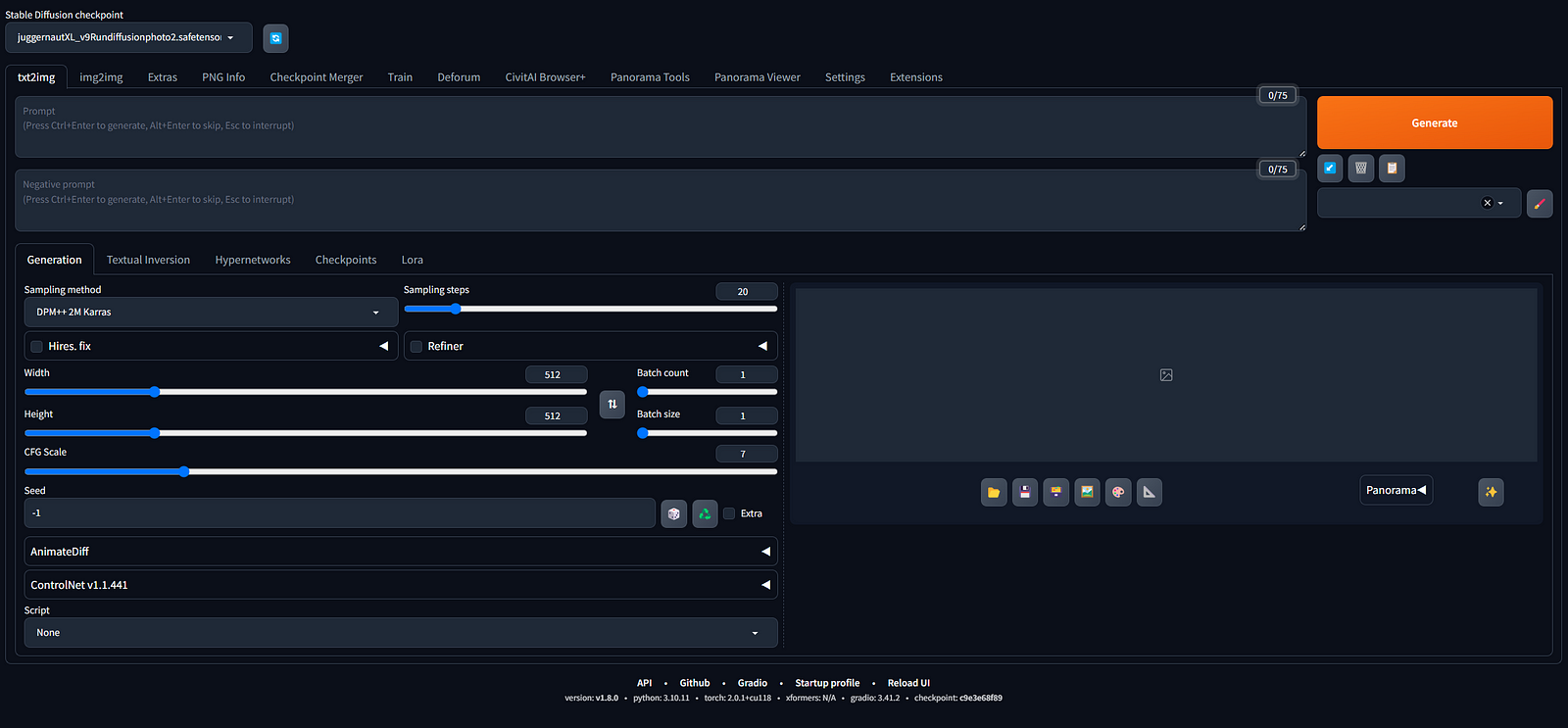

Stable Diffusion is usually controlled through a web interface such as Automatic1111 or ComfyUI. There you have hundreds of buttons and sliders to control the image or video you want to create.

I knew that you don’t actually need this interface: Stable Diffusion can also be controlled via an API. This means that you can drive it from an application that you code yourself.
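To make this concrete, here is a minimal Node.js sketch of such an API call. It is not the code of the tool described here. It assumes Automatic1111 was started with its `--api` flag and listens on `http://127.0.0.1:7860`; the function names and the `steps`, `width` and `height` values are only illustrative.

```javascript
// Sketch: ask a local Automatic1111 instance for one image over HTTP.
// Assumption: the web UI was launched with --api and runs on the address below.
const DEFAULT_BASE_URL = "http://127.0.0.1:7860";

// Build the HTTP request for the txt2img endpoint (no network access here).
function buildTxt2ImgRequest(prompt, baseUrl = DEFAULT_BASE_URL) {
  return {
    url: `${baseUrl}/sdapi/v1/txt2img`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      // steps, width and height are illustrative values, not the author's settings
      body: JSON.stringify({ prompt, steps: 20, width: 512, height: 512 }),
    },
  };
}

// Send the request and return the first image as a Buffer of PNG bytes.
// fetchImpl is injectable so the function can be tried without a server.
async function generateImage(prompt, { baseUrl = DEFAULT_BASE_URL, fetchImpl = fetch } = {}) {
  const { url, options } = buildTxt2ImgRequest(prompt, baseUrl);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Stable Diffusion API returned HTTP ${response.status}`);
  }
  const data = await response.json(); // shape: { images: ["<base64>", ...], ... }
  return Buffer.from(data.images[0], "base64");
}
```

With a running instance, `generateImage("a castle at sunset")` resolves to the image bytes, which can then be written to disk with `fs.writeFile` or sent on to a browser.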

For my project in the Bundestag, this is exactly what I am doing.

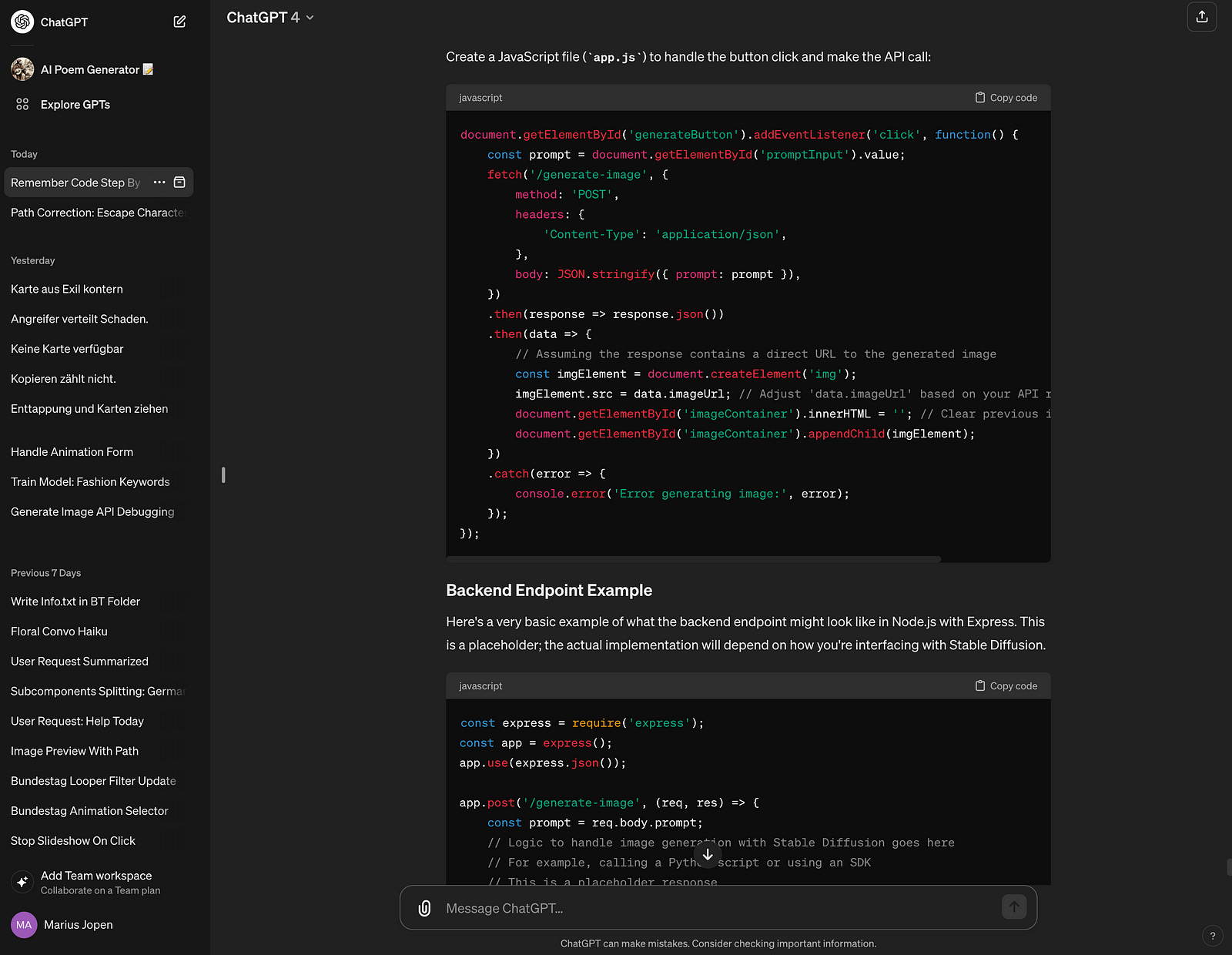

My friend Klaus Stille helped me set up the initial API connection to Stable Diffusion. Then, with the help of ChatGPT4, I managed to program a complete application around it.

Using ChatGPT4 or other AI tools as a coding aid is a complete game-changer. Before, I would probably have spent a couple of months figuring out how all of this works. Now I got it running within about 5 days.

You can just tell ChatGPT: Make me a textfield and a button, and when I click on the button, the API tells Stable Diffusion to generate an image.

And that works. It generates this code for me. It’s like magic!
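As a rough idea of what such generated code boils down to, here is a framework-free sketch of the "text field plus button" wiring. All names are hypothetical and this is not the actual application code; in React the same handler would typically be passed to a button's `onClick`. It assumes `requestImage(prompt)` returns a Promise that resolves to a base64-encoded PNG, which is how Automatic1111's API delivers images.

```javascript
// Turn the base64 PNG returned by the API into something an <img> can show.
function toDataUrl(base64Png) {
  return `data:image/png;base64,${base64Png}`;
}

// Create the click handler for the generate button.
// getPrompt reads the text field, showImage and showError update the page,
// requestImage performs the actual API call (all four are supplied by the caller).
function createGenerateHandler({ getPrompt, requestImage, showImage, showError }) {
  let busy = false; // ignore clicks while a generation is still running
  return async function onGenerateClick() {
    const prompt = getPrompt().trim();
    if (busy || prompt === "") return;
    busy = true;
    try {
      showImage(toDataUrl(await requestImage(prompt)));
    } catch (err) {
      showError(err.message);
    } finally {
      busy = false;
    }
  };
}
```

Keeping the handler separate from the page makes it easy to try out with fake functions before a real Stable Diffusion instance is connected.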

So if you are afraid of programming, don’t be. Just open ChatGPT and ask all the stupid questions. I recommend ChatGPT4 for this.

So the tool I developed can control Stable Diffusion and Deforum via an API. I programmed it in JavaScript, with Node.js and React. And like I said, ChatGPT did most of the work.

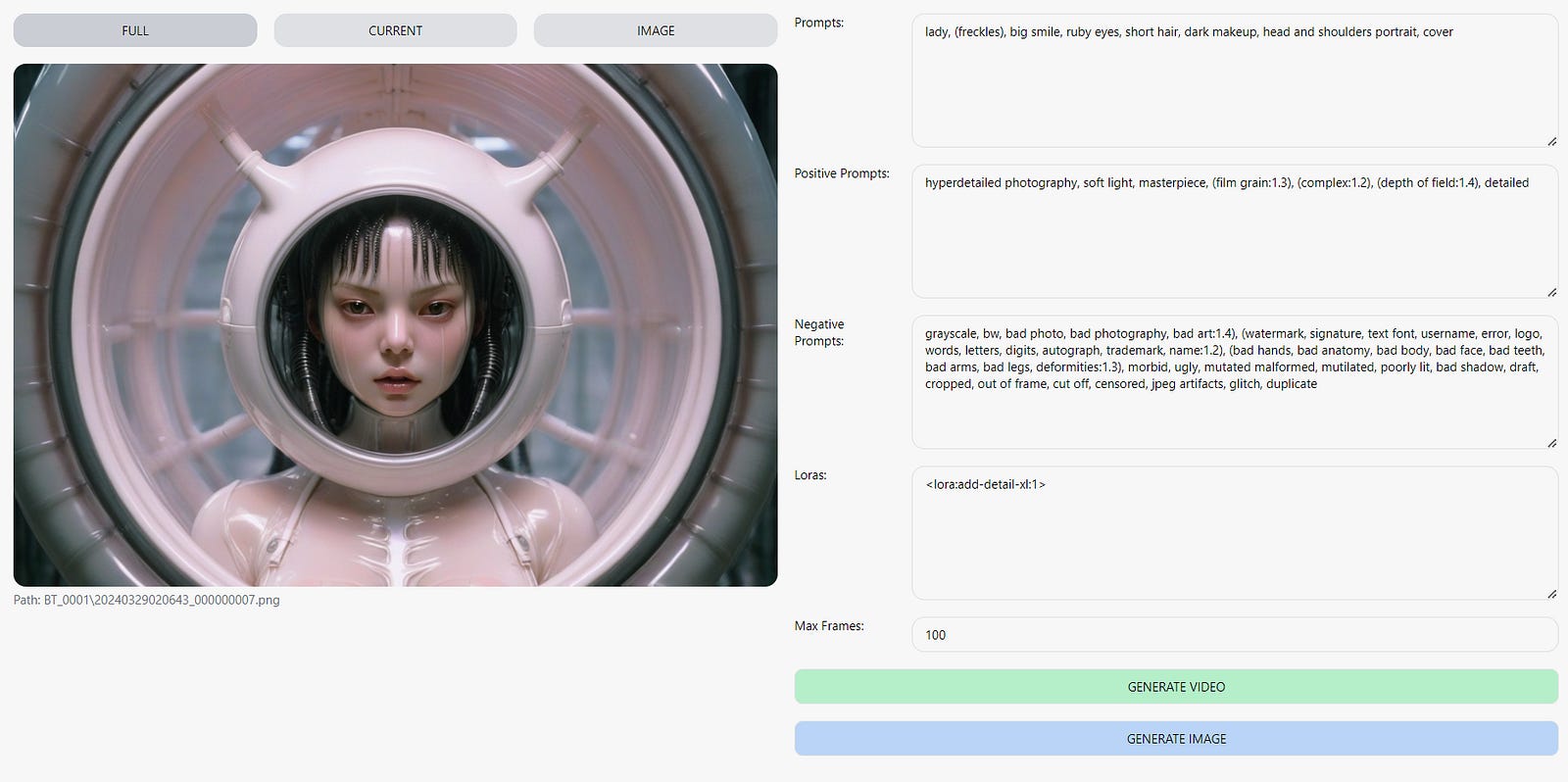

The application allows the user to enter a text prompt and hit a generate button. Then a video gets created.

When the user changes the text and hits the button again, the new video continues where the old one stopped. This way it is possible to create long videos step by step.
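One way to picture this chaining is to remember the last frame of each clip and hand it to the next render as its starting image. The sketch below only illustrates that idea and is an assumption about the approach, not the tool's actual code. `renderClip` is a hypothetical stand-in for the real Deforum call: it receives `{ prompt, initFrame }` and must resolve to an array of frames (for example file paths).

```javascript
// Sketch of chaining clips: each new clip starts from the last frame of the
// previous one. renderClip is hypothetical and supplied by the caller.
function createVideoSession(renderClip) {
  let lastFrame = null; // null means "start from scratch"
  const allFrames = [];

  return {
    // Render one more clip for the given prompt and append it to the video.
    async extend(prompt) {
      const frames = await renderClip({ prompt, initFrame: lastFrame });
      if (frames.length === 0) {
        throw new Error("renderClip returned no frames");
      }
      allFrames.push(...frames);
      lastFrame = frames[frames.length - 1];
      return frames;
    },
    // All frames rendered so far, in order.
    frames() {
      return [...allFrames];
    },
  };
}
```

Each click on the generate button would then call `session.extend(newPrompt)`, and the combined result of `session.frames()` can be stitched into one long video.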

I am still amazed that it works.

I feel like everything is possible now. Controlling AI image and video generation through code opens so many doors. And like I said, it is not difficult.

In a couple of weeks I plan to release my tool and code so that other people can use it. Maybe I could even turn it into a real app that people can use online.

I hope that this rather long and nerdy article was interesting for some of you. And I have actually just scratched the surface…

That’s that.

Much love!

Marius

Thanks for reading Marius Jopen! Subscribe for free to receive new posts and support my work.